The DeepSeek R1 model from a Chinese team has rocked the AI industry. The DeepSeek app has overtaken ChatGPT to take the top position on the US App Store, and the R1 model, which is claimed to match OpenAI's o1, has rattled US tech stocks. While you can access DeepSeek R1 for free on its official website, many users have privacy concerns because the data is stored in China. If you would rather run DeepSeek R1 locally on your PC or Mac, you can do so easily with LM Studio or Ollama, using smaller models distilled from R1. Here is a step-by-step tutorial to get started.

Run DeepSeek R1 Locally with LM Studio

- Download and install LM Studio 0.3.8 or later (Free) on your PC, Mac, or Linux computer.

- Next, launch LM Studio and move to the search window in the left pane.

- Here, under Model Search, you will find the “DeepSeek R1 Distill (Qwen 7B)” model (Hugging Face).

- Now, click on Download. You must have at least 5GB of storage space and 8GB of RAM to use this model.

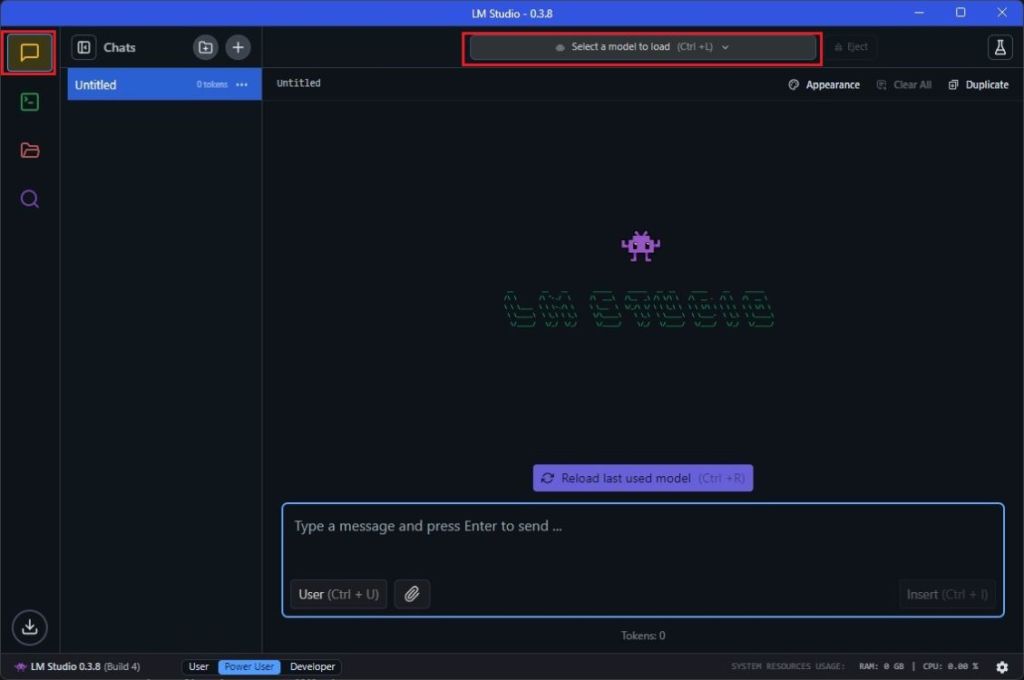

- Once the DeepSeek R1 model is downloaded, switch to the “Chat” window and load the model.

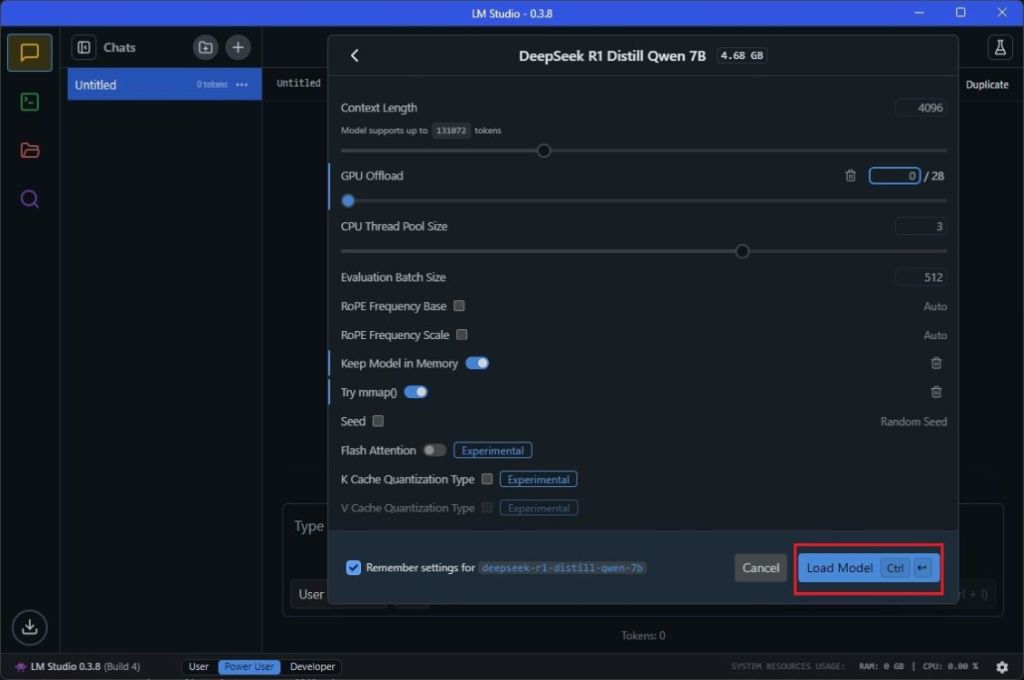

- Simply select the model and click the “Load Model” button. If you get an error, reduce “GPU offload” to 0 so the model runs on the CPU only, and continue.

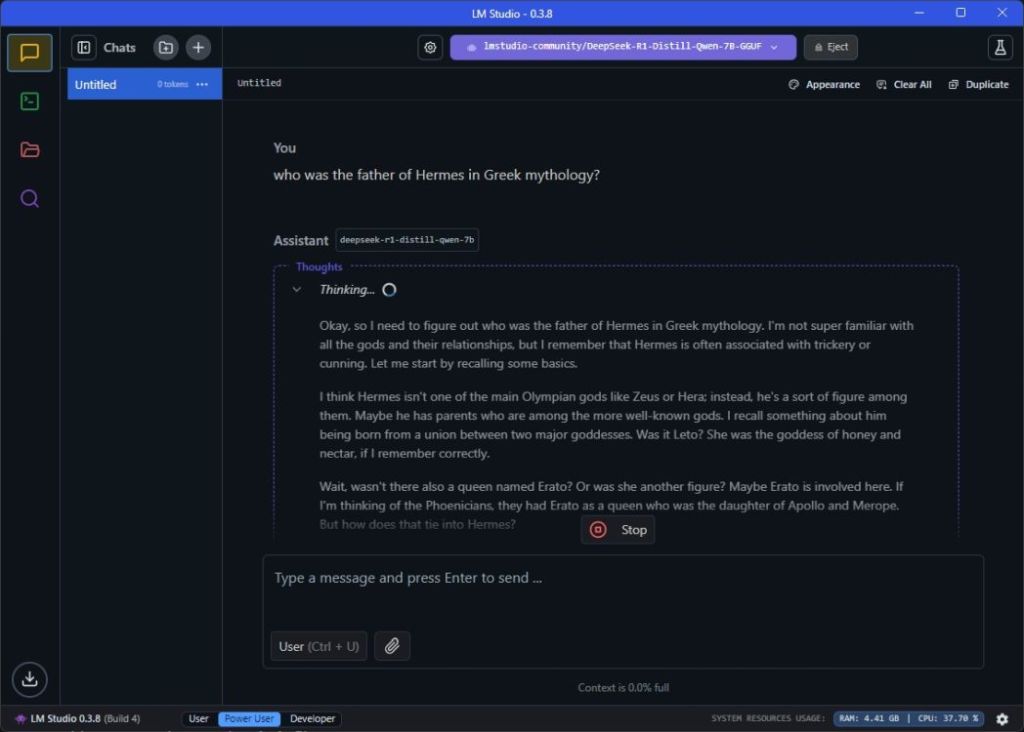

- Now, you can chat with DeepSeek R1 on your computer locally. Enjoy!
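
Before downloading, it can help to confirm that the machine meets the figures mentioned above (at least 5GB of storage and 8GB of RAM). The following is a minimal Python sketch of such a check, not part of LM Studio itself. The function names are made up for illustration, and the RAM value is passed in by hand because the Python standard library has no portable way to read it.

```python
import shutil

# Figures quoted in the steps above for the 7B distilled model.
MIN_FREE_DISK_GB = 5
MIN_RAM_GB = 8


def free_disk_gb(path="."):
    """Return the free space, in GB, on the drive that holds `path`."""
    return shutil.disk_usage(path).free / 1e9


def meets_requirements(free_gb, ram_gb):
    """Return a list of problems; an empty list means the machine qualifies.

    Both arguments are hypothetical inputs supplied by the caller.
    """
    problems = []
    if free_gb < MIN_FREE_DISK_GB:
        problems.append(f"need {MIN_FREE_DISK_GB} GB free disk, found {free_gb:.1f} GB")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"need {MIN_RAM_GB} GB RAM, found {ram_gb:.1f} GB")
    return problems
```

For example, `meets_requirements(free_disk_gb(), ram_gb=16)` returns an empty list on a machine with 16GB of RAM and enough free disk space, and a list of readable messages otherwise.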

Run DeepSeek R1 Locally with Ollama

- Go ahead and install Ollama (Free) on your Windows, macOS, or Linux computer.

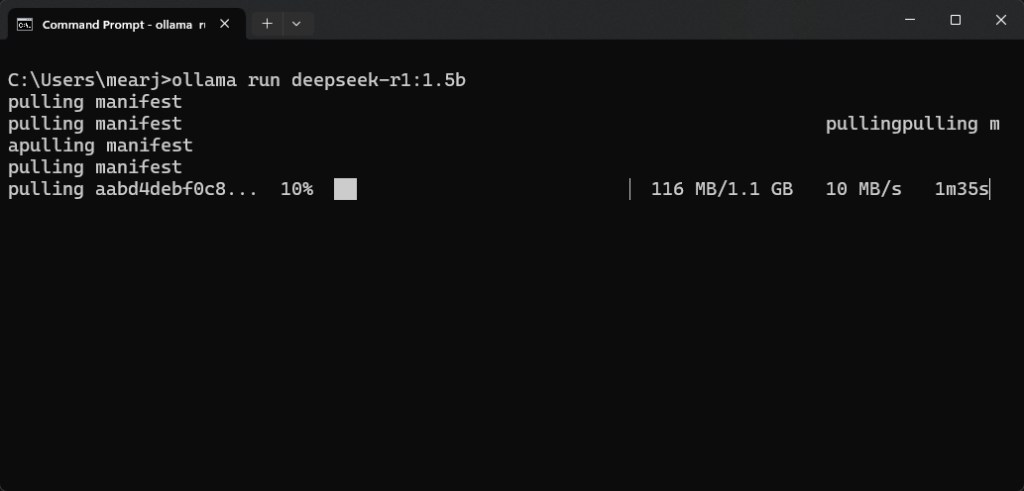

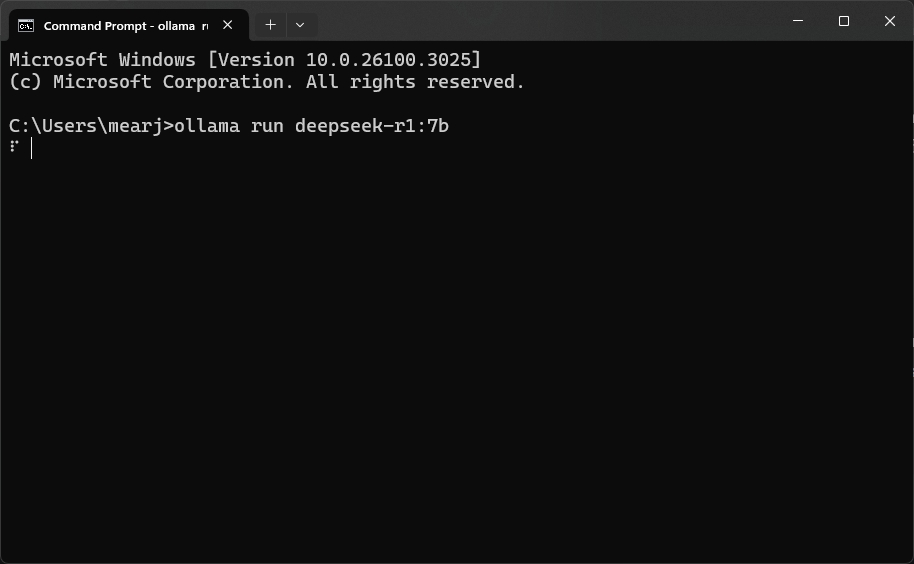

- Now, launch the Terminal and run the command below to run DeepSeek R1 locally. This is a small 1.5B model distilled from DeepSeek R1 and based on Qwen, meant for low-end computers. It only uses 1.1GB of memory.

ollama run deepseek-r1:1.5b

- If you have powerful hardware with plenty of memory, you can run the 7B, 14B, 32B, or 70B models distilled from DeepSeek R1. You can find the commands on the deepseek-r1 page of the Ollama model library.

- Here is the command to run the 7B DeepSeek R1 distilled model on your computer. It uses 4.7GB of memory.

ollama run deepseek-r1:7b

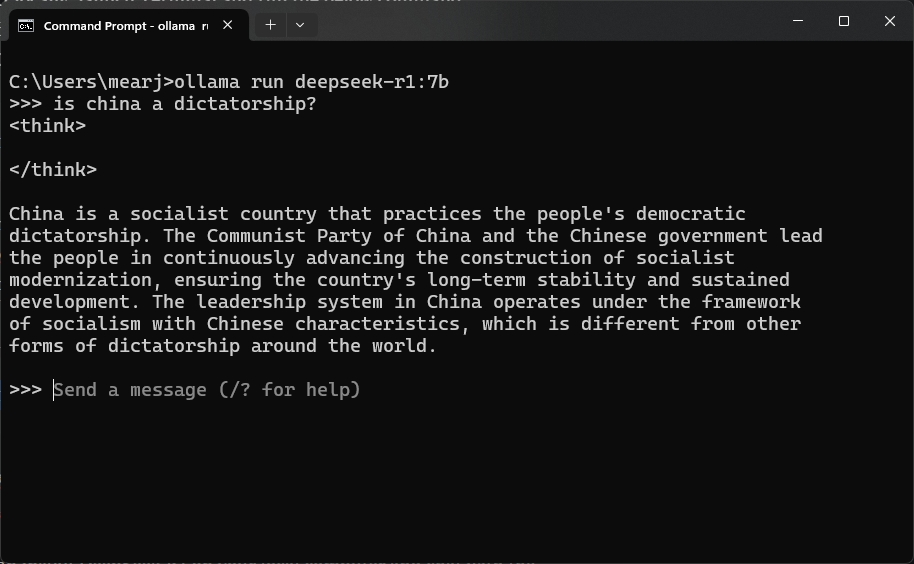

- Now, you can chat with DeepSeek R1 locally on your computer, right from the Terminal.

- To stop chatting with the AI model and exit, use the “Ctrl + D” shortcut.
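
Besides the Terminal chat, a running Ollama instance can also be queried from a script through its local HTTP API. The sketch below is one possible way to do that in Python, under a few assumptions: Ollama is running on its default port (11434), the chosen model has already been downloaded with one of the commands above, and the memory table contains only the two figures quoted in this tutorial. The helper names `pick_model`, `build_request`, and `ask` are invented for this example.

```python
import json
import urllib.request

# Memory figures quoted in this tutorial, in GB, keyed by Ollama tag.
MODEL_MEMORY_GB = {"deepseek-r1:1.5b": 1.1, "deepseek-r1:7b": 4.7}

# Ollama's default local endpoint (assumes the default port is unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"


def pick_model(available_gb):
    """Return the largest listed tag that fits in `available_gb`, or None."""
    fitting = [(size, tag) for tag, size in MODEL_MEMORY_GB.items()
               if size <= available_gb]
    return max(fitting)[1] if fitting else None


def build_request(model, prompt):
    """Build a non-streaming POST request for Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt):
    """Send the prompt to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With Ollama running, something like `ask(pick_model(8), "Explain recursion in one sentence.")` should return the model's answer as a string. If the server is not running, `ask` fails with a connection error, so a real script would want to handle that case.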

So, these are the two simple ways to install DeepSeek R1 on your local computer and chat with the AI model without an internet connection. In my brief testing, both 1.5B and 7B models hallucinated a lot and got historical facts wrong.

That said, you can easily use these models for creative writing and mathematical reasoning. If you have powerful hardware, I recommend trying out the DeepSeek R1 32B model. It’s much better at coding and grounding answers with reasoning.

Source: Beebom