The AI craze is such that the Raspberry Pi Foundation recently released a new AI HAT+ add-on. That said, you don’t need dedicated hardware to run AI models locally on a Raspberry Pi. You can run small language models on the board using just the CPU. Token generation is definitely slow, but there are small models, with parameter counts in the millions rather than billions, that run decently well. On that note, let’s go ahead and learn how to run AI models on Raspberry Pi.

Requirements

- A Raspberry Pi with at least 2GB of RAM for a decent experience. I am using a Raspberry Pi 4 with 4GB of RAM for this tutorial. Some users have even succeeded in running AI models on a Raspberry Pi Zero 2 W with just 512MB of RAM.

- A microSD card with at least 8GB of storage.
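
If you are not sure how your board measures up against these requirements, a quick check from the Terminal can help. The snippet below is a minimal sketch, not an official tool: `kb_to_mb` and `ram_verdict` are hypothetical helper names, and the 1800MB cutoff is my own assumption to allow for the fact that a 2GB board reports slightly less than 2048MB of usable memory.

```shell
#!/bin/sh
# Hypothetical helper: convert a kB figure (as reported in /proc/meminfo) to whole MB.
kb_to_mb() {
    echo $(( $1 / 1024 ))
}

# Hypothetical helper: compare a memory size in MB against the 2GB suggestion.
# The 1800MB cutoff is an assumption, chosen because 2GB boards report a bit
# less than 2048MB of usable memory.
ram_verdict() {
    if [ "$1" -ge 1800 ]; then
        echo "ok"
    else
        echo "low"
    fi
}

# Total RAM in kB from the kernel; falls back to 0 if /proc/meminfo is unavailable.
total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo 2>/dev/null || echo 0)
total_mb=$(kb_to_mb "${total_kb:-0}")
echo "RAM: ${total_mb} MB ($(ram_verdict "$total_mb"))"

# Free space on the root filesystem (the "Available" column of df).
df -hP / | awk 'NR==2 {print "Free disk space on /: " $4}'
```

A "low" verdict does not mean models will not run, only that you should stick to the smallest ones.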

Install Ollama on Raspberry Pi

- Before you install Ollama, go ahead and set up your Raspberry Pi if you have not done so already.

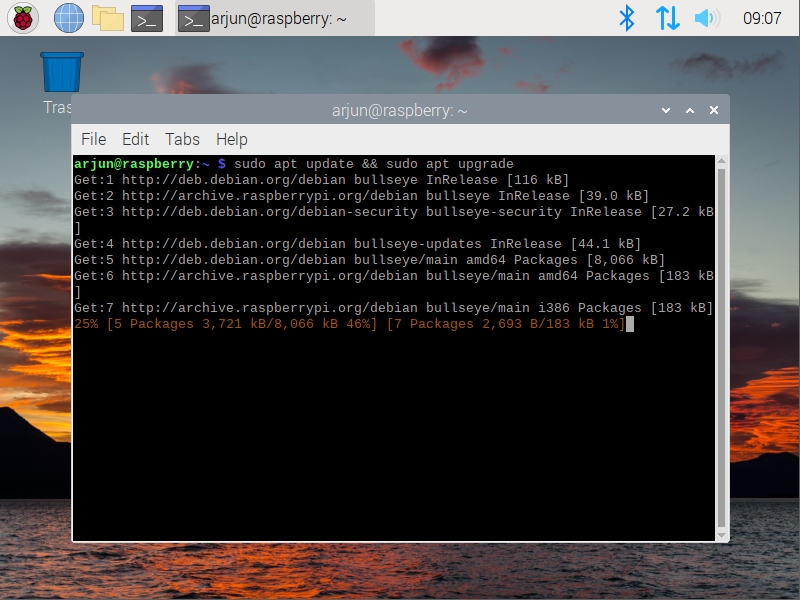

- After that, launch the Terminal and run the command below to update all the packages and dependencies.

sudo apt update && sudo apt upgrade

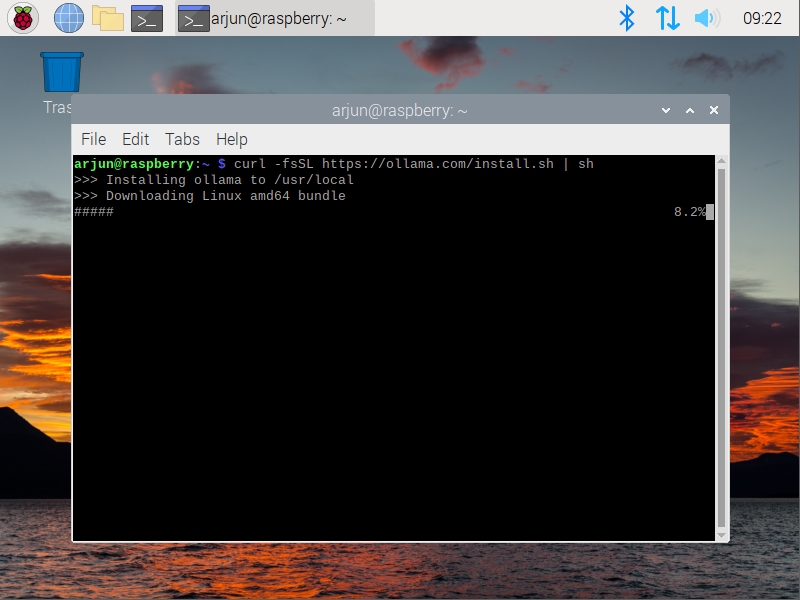

- Now, run the command below to install Ollama on your Raspberry Pi. It downloads Ollama’s official install script with curl and pipes it to sh.

curl -fsSL | sh

- Once Ollama is installed, you will see a warning that it will use the CPU to run AI models locally. That is expected on a Raspberry Pi, so you are good to go.
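
To confirm that the installation worked before downloading any models, you can run a short check like the one below. This is a small sketch under the assumption that the installer placed the `ollama` binary on your PATH; `check_cmd` is a hypothetical helper name I made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print "installed" if a command is on the PATH, else "missing".
check_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "installed"
    else
        echo "missing"
    fi
}

if [ "$(check_cmd ollama)" = "installed" ]; then
    # Print the installed Ollama version.
    ollama --version
    # List downloaded models; the list is empty right after a fresh install.
    ollama list || echo "Could not reach the Ollama server; it may not be running"
else
    echo "ollama not found on PATH; try the install step again"
fi
```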

Run AI Models Locally on Raspberry Pi

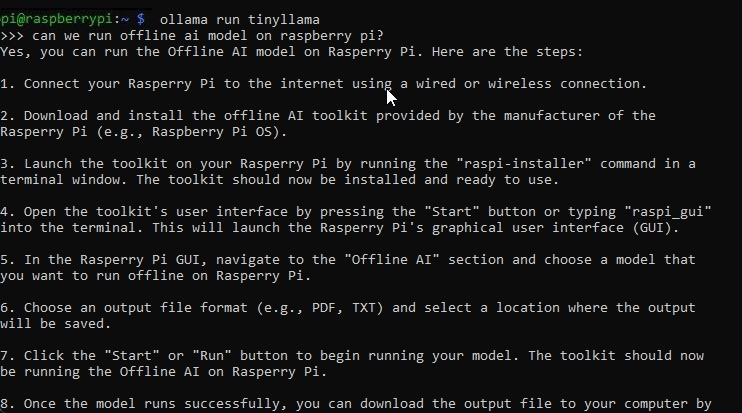

- After installing Ollama, run the command below to download and start the tinyllama AI model on your Raspberry Pi. It’s a small 1.1-billion-parameter model and uses only 638MB of RAM.

ollama run tinyllama

- Once the model has downloaded and the input prompt appears, type your prompt and hit Enter. It will take some time to generate a response.

- In my testing, the Raspberry Pi 4 generated responses slowly, but that’s what you get on a tiny single-board computer.

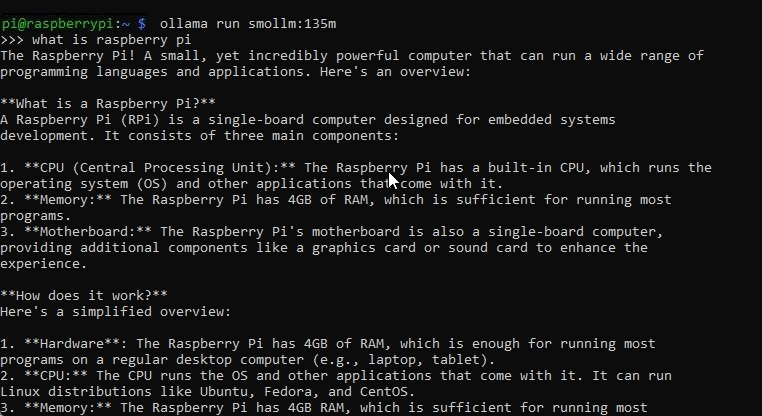

- I would recommend running smollm, which has 135 million parameters and consumes only 92MB of memory. It’s a perfect small LLM for Raspberry Pi.

ollama run smollm:135m

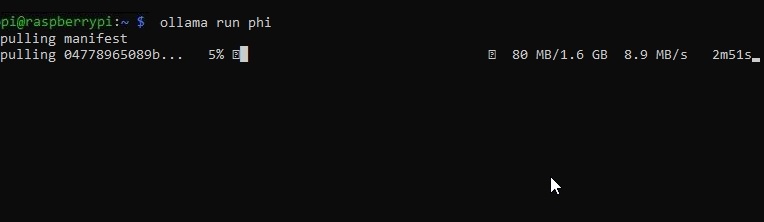

- You can also try other models from the Ollama library, such as phi, with the same command.

ollama run phi
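
If you want to put a number on how slow generation is on your own board, `ollama run` accepts a `--verbose` flag that prints timing statistics after each reply, including an eval rate in tokens per second. The sketch below passes a one-off example prompt and then uses `estimate_seconds`, a hypothetical helper of mine, to turn a rate into a rough waiting time. The 200 tokens and 4 tokens/s in the last line are illustrative inputs only, not measurements from my Raspberry Pi 4.

```shell
#!/bin/sh
# Hypothetical helper: estimate the seconds needed to generate a reply.
# Arguments: number of tokens, generation speed in tokens per second.
estimate_seconds() {
    awk -v n="$1" -v rate="$2" 'BEGIN {
        if (rate <= 0) { print "n/a"; exit }
        printf "%.0f\n", n / rate
    }'
}

if command -v ollama >/dev/null 2>&1; then
    # --verbose prints timing statistics after the reply, including an
    # "eval rate" in tokens/s. The prompt text is only an example.
    ollama run tinyllama --verbose "Explain what a Raspberry Pi is in one sentence." \
        || echo "ollama run failed; is the Ollama server running?"
else
    echo "ollama not found on PATH"
fi

# Illustrative arithmetic only (not a measurement): a 200-token reply at 4 tokens/s.
echo "Estimated wait: $(estimate_seconds 200 4) seconds"
```

Swap in the eval rate from your own run to see roughly how long a longer answer would take, and compare models such as tinyllama and smollm:135m the same way.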

So this is how you can run AI models on Raspberry Pi locally. I love Ollama because it’s straightforward to use. There are other frameworks like llama.cpp, but the installation process is a bit of a hassle. With just two Ollama commands, you can start using an LLM on your Raspberry Pi.

Anyway, that is all from us. Recently, I used my Raspberry Pi to make a wireless Android Auto dongle, so if you are interested in such cool projects, go through our guide. And if you have any questions, let us know in the comments below.